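This article introduces new context management capabilities on the Claude Developer Platform: context editing and the memory tool, both enhanced by Claude Sonnet 4.5. These capabilities are designed to overcome context window limits in AI agents, letting them handle complex, long-running tasks without degraded performance or loss of critical information. When the token limit approaches, context editing automatically removes stale tool calls and results from the context window, keeping the focus on relevant data, preserving the conversation flow, and improving model performance. The memory tool provides a file-based, client-side system that Claude uses to store and retrieve information outside the context window, so agents can build knowledge bases, maintain project state, and reference previous learnings across sessions. Sonnet 4.5’s built-in context awareness enhances both capabilities. In performance evaluations, combining the two improved agent performance by 39% on complex search tasks; context editing alone reduced token consumption by 84% in a 100-turn web search. These updates support the development of robust long-running agents for coding, research, data processing, and other scenarios.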

Today, we’re introducing new capabilities for managing your agents’ context on the Claude Developer Platform: context editing and the memory tool.

With our latest model, Claude Sonnet 4.5, these capabilities enable developers to build AI agents capable of handling long-running tasks at higher performance and without hitting context limits or losing critical information.

Context windows have limits, but real work doesn’t

As production agents handle more complex tasks and generate more tool results, they often exhaust their effective context windows, leaving developers stuck choosing between cutting agent transcripts and degrading performance. Context management solves this in two ways, helping developers ensure that only relevant data stays in context and that valuable insights are preserved across sessions.

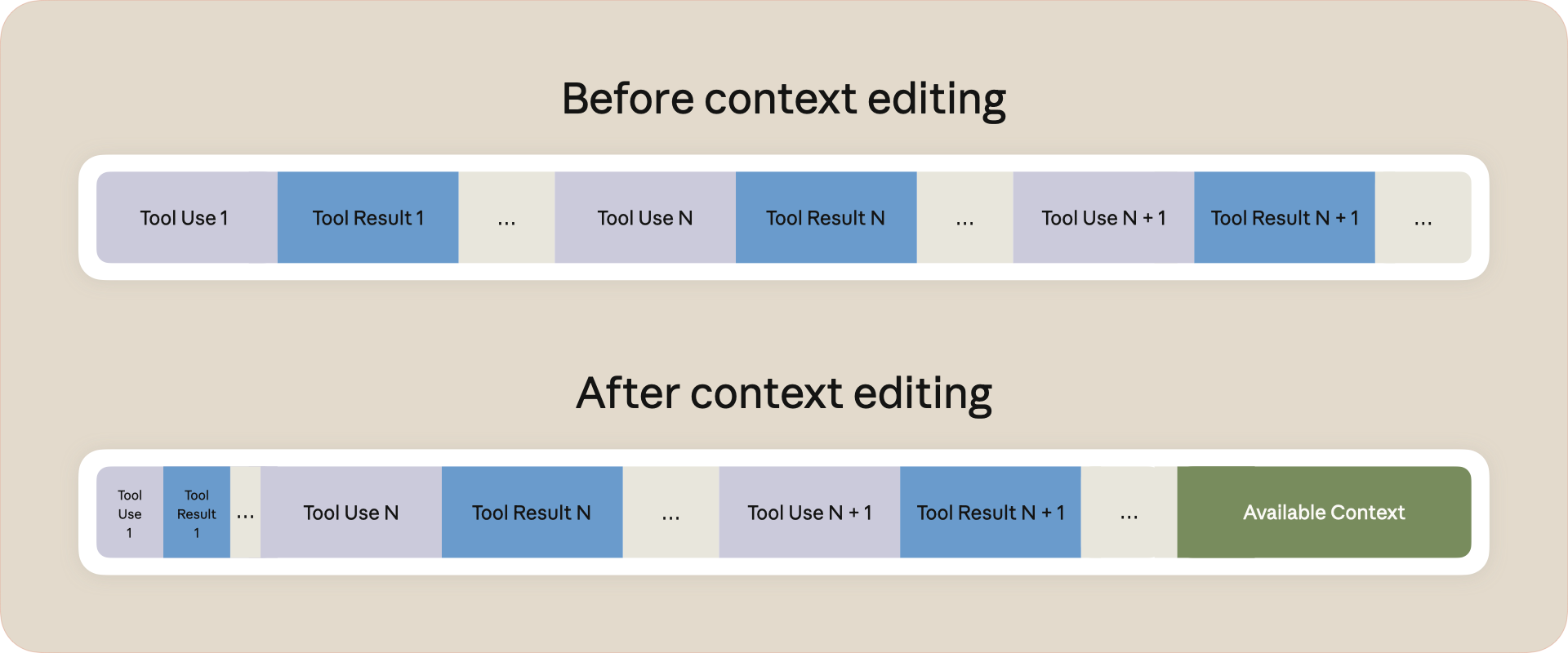

Context editing automatically clears stale tool calls and results from the context window when the conversation approaches token limits. As your agent executes tasks and accumulates tool results, context editing removes outdated content while preserving the conversation flow, effectively extending how long agents can run without manual intervention. This also improves effective model performance, because Claude focuses only on relevant context.
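To make the idea concrete, here is a minimal client-side sketch of the clearing behavior described above. It is an illustration only, not the platform’s implementation: the function names, the simplified `tool` role label, the placeholder text, and the rough four-characters-per-token estimate are all assumptions made for this example.

```python
from typing import Any

PLACEHOLDER = "[tool result cleared]"  # hypothetical marker text


def estimate_tokens(messages: list[dict[str, Any]]) -> int:
    """Very rough estimate: about 4 characters per token (an assumption)."""
    return sum(len(str(m["content"])) for m in messages) // 4


def clear_stale_tool_results(
    messages: list[dict[str, Any]],
    trigger_tokens: int,
    keep_recent: int,
) -> list[dict[str, Any]]:
    """Return a copy of messages with old tool results replaced by a placeholder.

    Nothing changes while the estimate is at or below trigger_tokens.
    Otherwise every tool result except the most recent keep_recent is
    cleared; user and assistant turns are left untouched.
    """
    if estimate_tokens(messages) <= trigger_tokens:
        return list(messages)

    tool_indices = [i for i, m in enumerate(messages) if m["role"] == "tool"]
    stale = set(tool_indices[:-keep_recent]) if keep_recent > 0 else set(tool_indices)

    return [
        {**m, "content": PLACEHOLDER} if i in stale else m
        for i, m in enumerate(messages)
    ]


history = [
    {"role": "user", "content": "Find the failing test."},
    {"role": "tool", "content": "x" * 400},
    {"role": "assistant", "content": "Reading the next file."},
    {"role": "tool", "content": "y" * 400},
]
trimmed = clear_stale_tool_results(history, trigger_tokens=100, keep_recent=1)
print(trimmed[1]["content"])  # [tool result cleared]
```

The sketch keeps the turn order intact and swaps only the content of older tool results, which mirrors the goal of preserving conversation flow while freeing space.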

The memory tool enables Claude to store and consult information outside the context window through a file-based system. Claude can create, read, update, and delete files in a dedicated memory directory stored in your infrastructure that persists across conversations. This allows agents to build up knowledge bases over time, maintain project state across sessions, and reference previous learnings without having to keep everything in context.

The memory tool operates entirely client-side through tool calls. Developers manage the storage backend, giving them complete control over where the data is stored and how it’s persisted.
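Because storage is the developer’s responsibility, the client needs some handler that maps tool calls onto files. The sketch below shows one possible shape, assuming a local directory as the backend. The class name `FileMemoryStore` and the command names (`create`, `read`, `update`, `delete`) are hypothetical, chosen to mirror the operations listed above; the actual tool schema is defined in the documentation.

```python
import tempfile
from pathlib import Path


class FileMemoryStore:
    """Hypothetical client-side backend for a file-based memory directory."""

    def __init__(self, root: Path) -> None:
        self.root = root.resolve()
        self.root.mkdir(parents=True, exist_ok=True)

    def _resolve(self, name: str) -> Path:
        # Refuse paths that would escape the memory directory.
        path = (self.root / name).resolve()
        if not path.is_relative_to(self.root):
            raise ValueError(f"path escapes memory directory: {name}")
        return path

    def handle(self, command: str, name: str, text: str = "") -> str:
        """Dispatch one tool call; the command names are illustrative."""
        path = self._resolve(name)
        if command in ("create", "update"):
            # In this sketch both commands simply overwrite the file.
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_text(text, encoding="utf-8")
            return f"saved {name}"
        if command == "read":
            return path.read_text(encoding="utf-8")
        if command == "delete":
            path.unlink()
            return f"deleted {name}"
        raise ValueError(f"unknown command: {command}")


with tempfile.TemporaryDirectory() as tmp:
    memory_dir = Path(tmp) / "memories"

    # First session: save an insight.
    FileMemoryStore(memory_dir).handle(
        "create", "notes.md", "Auth bug traced to token refresh."
    )

    # Later session: a fresh store over the same directory still sees it.
    print(FileMemoryStore(memory_dir).handle("read", "notes.md"))
```

Swapping the local directory for a database or object store would only change the body of `handle`, which is the kind of control over persistence that a client-side design allows.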

Claude Sonnet 4.5 enhances both capabilities with built-in context awareness—tracking available tokens throughout conversations to manage context more effectively.

Together, these updates create a system that improves agent performance:

- Enable longer conversations by automatically removing stale tool results from context

- Boost accuracy by saving critical information to memory and carrying that learning across successive agentic sessions

Building long-running agents

Claude Sonnet 4.5 is the best model in the world for building agents. These features unlock new possibilities for long-running agents—processing entire codebases, analyzing hundreds of documents, or maintaining extensive tool interaction histories. Context management builds on this foundation, ensuring agents can leverage this expanded capacity efficiently while still handling workflows that extend beyond any fixed limit. Use cases include:

- Coding: Context editing clears old file reads and test results while memory preserves debugging insights and architectural decisions, enabling agents to work on large codebases without losing progress.

- Research: Memory stores key findings while context editing removes old search results, building knowledge bases that improve performance over time.

- Data processing: Agents store intermediate results in memory while context editing clears raw data, handling workflows that would otherwise exceed token limits.
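The data processing pattern in the last item can be sketched as a toy loop. This is a simplified illustration under stated assumptions, not how the platform works internally: a plain dictionary stands in for files in the memory directory, a list of strings stands in for the live context window, and `process_batches` is a hypothetical name.

```python
def process_batches(batches: list[list[int]]) -> tuple[dict[str, int], list[str]]:
    """Process batches while keeping only compact notes in context."""
    memory: dict[str, int] = {}  # stands in for files in the memory directory
    context: list[str] = []      # stands in for the live context window

    for i, batch in enumerate(batches):
        # The raw tool result enters the context.
        context.append(f"raw batch {i}: {batch}")
        # The intermediate result is saved outside the context.
        memory[f"batch_{i}_total"] = sum(batch)
        # The raw data is cleared, leaving a short note behind.
        context[-1] = f"batch {i} processed (raw data cleared)"

    return memory, context


memory, context = process_batches([[1, 2, 3], [10, 20]])
print(memory)  # {'batch_0_total': 6, 'batch_1_total': 30}
```

The context grows by one short line per batch regardless of batch size, while the results needed later remain available in memory.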

Performance improvements with context management

On an internal evaluation set for agentic search, we tested how context management improves agent performance on complex, multi-step tasks. The results demonstrate significant gains: combining the memory tool with context editing improved performance by 39% over baseline. Context editing alone delivered a 29% improvement.

In a 100-turn web search evaluation, context editing enabled agents to complete workflows that would otherwise fail due to context exhaustion—while reducing token consumption by 84%.

Getting started

These capabilities are available today in public beta on the Claude Developer Platform, natively and in Amazon Bedrock and Google Cloud’s Vertex AI. Explore the documentation for context editing and the memory tool, or visit our cookbook to learn more.

Anthropic is not affiliated with, endorsed by, or sponsored by CATAN GmbH or CATAN Studio. The CATAN trademark and game are the property of CATAN GmbH.